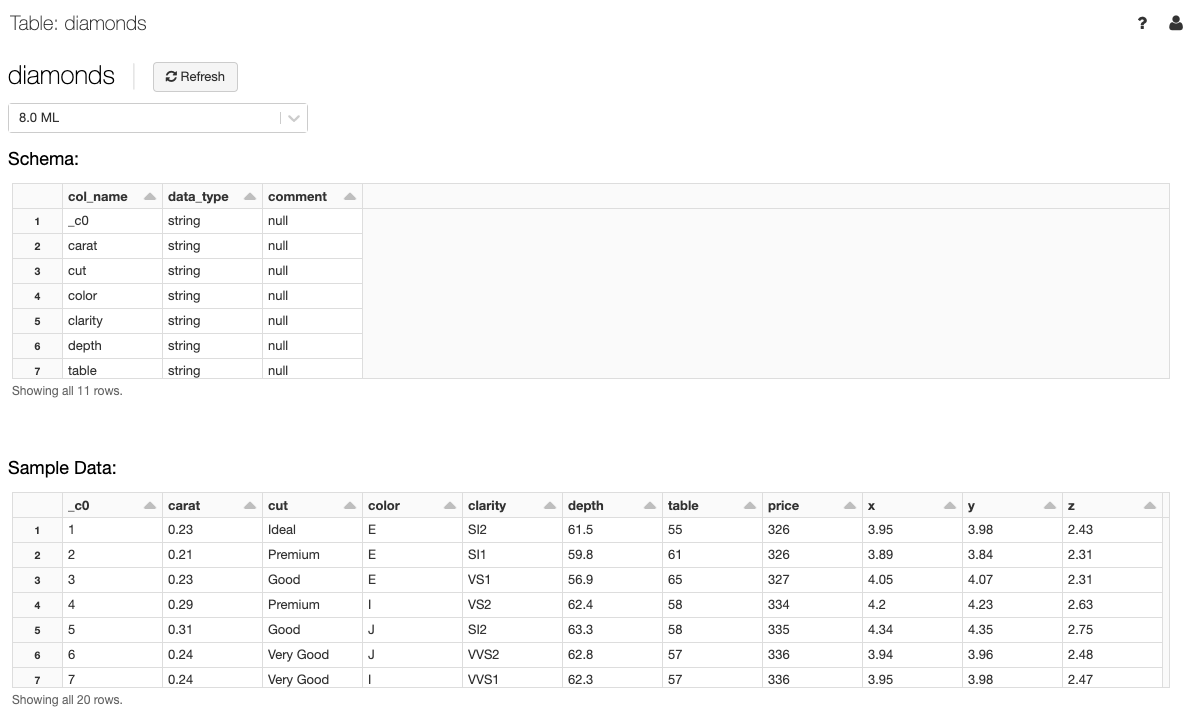

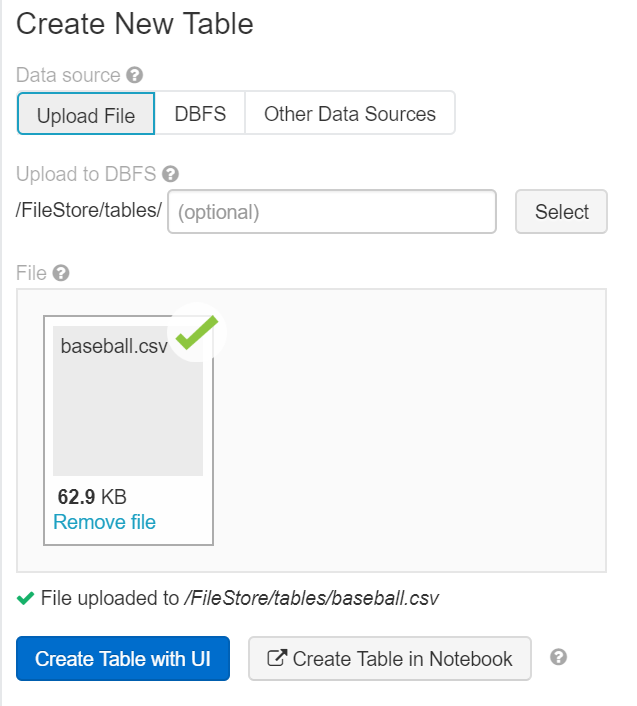

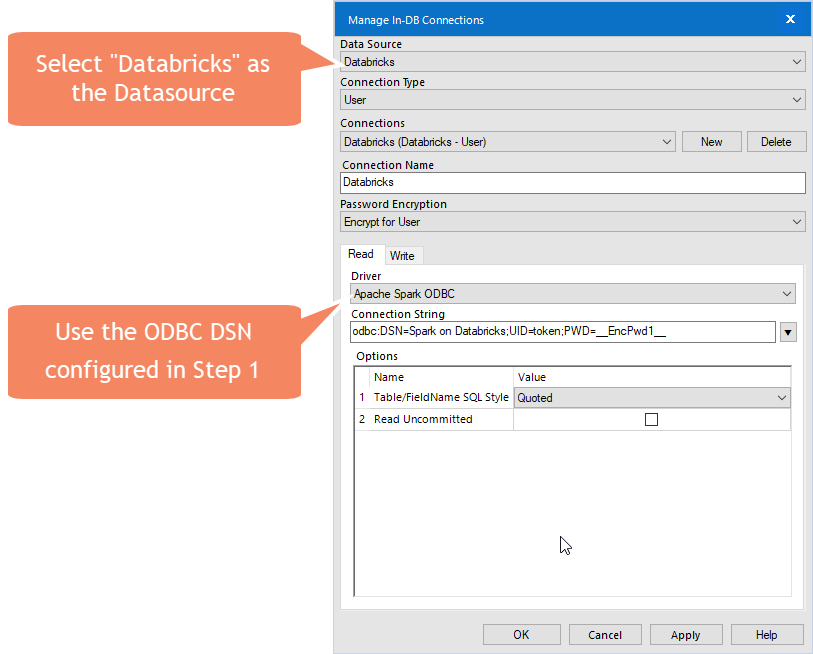

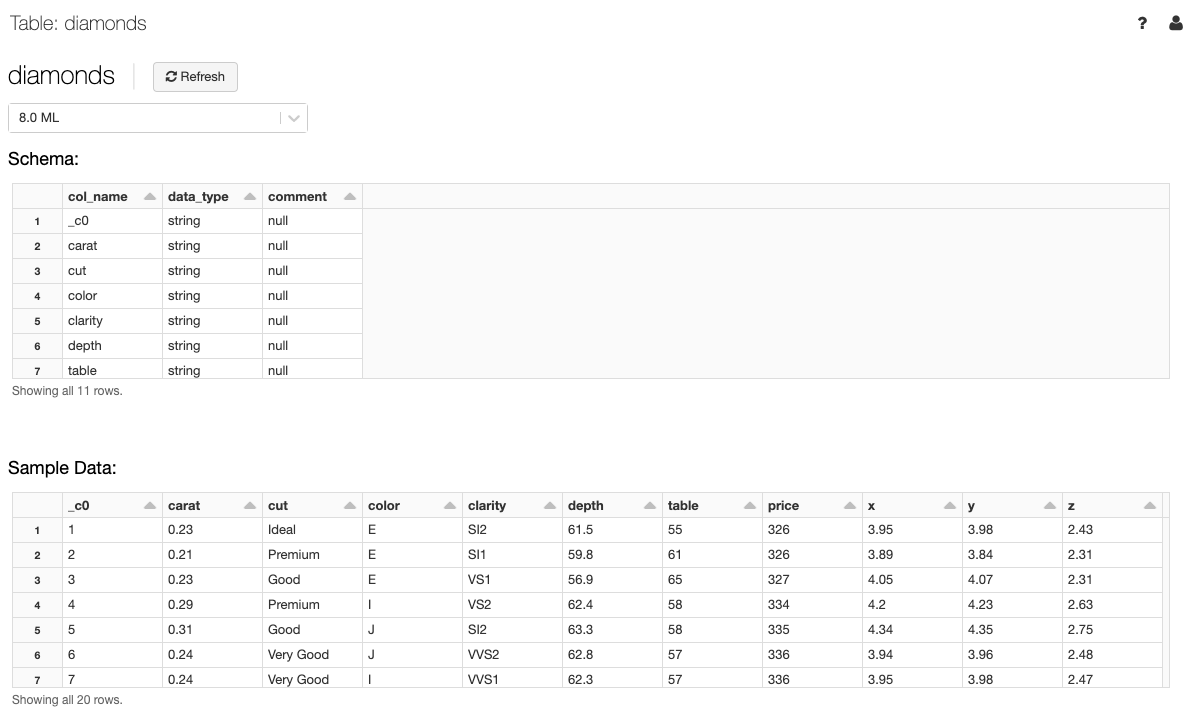

Temporary Tables Databricks. Inline table (Databricks SQL): a temporary table created using a VALUES clause. Python: create a temporary view or table from a Spark DataFrame with `temp_table_name = "temp_table"` and `df.createOrReplaceTempView(temp_table_name)`. Step 3, creating a permanent SQL table from a Spark DataFrame: `permanent_table_name = "cdp.perm_table"` and `df.write.format("parquet").saveAsTable(permanent_table_name)`. Local Table, a.k.a. Temporary Table, a.k.a. Temporary View. It can be of the following formats.
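
The two steps quoted above can be sketched as one small helper. This is only an illustration: `register_views` is a hypothetical name, the default table names are taken from the snippet (with `cdp.perm_table` being a best guess at the garbled original), and `df` is assumed to be a Spark DataFrame in an active session.

```python
def register_views(df, temp_table_name="temp_table",
                   permanent_table_name="cdp.perm_table"):
    """Expose a Spark DataFrame to SQL, first temporarily, then permanently.

    `df` is assumed to be a pyspark.sql.DataFrame; the default names are
    illustrative and come from the snippet above.
    """
    # Temporary view: registers a name for the DataFrame in the current
    # Spark session; no data is written.
    df.createOrReplaceTempView(temp_table_name)

    # Permanent table: writes the data as Parquet and registers the table
    # in the metastore so it outlives the session.
    df.write.format("parquet").saveAsTable(permanent_table_name)

    return temp_table_name, permanent_table_name
```

In a notebook this would typically be followed by something like `spark.sql("SELECT * FROM temp_table")`. With the default save mode, `saveAsTable` is expected to fail if the permanent table already exists.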

Create Table Issue in Azure Databricks (Microsoft Q&A). From docs.microsoft.com

This is also known as a temporary view. The data in a temporary table is stored using Hive's highly optimized, in-memory columnar format. The table or view name to be cached. Registering a temporary table using sparklyr in Databricks. A view name, optionally qualified with a database name. Python: create a temporary view or table from a Spark DataFrame with `temp_table_name = "temp_table"` and `df.createOrReplaceTempView(temp_table_name)`. Step 3, creating a permanent SQL table from a Spark DataFrame: `permanent_table_name = "cdp.perm_table"` and `df.write.format("parquet").saveAsTable(permanent_table_name)`.

GLOBAL TEMPORARY views are tied to a system-preserved temporary database, `global_temp`.
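
Because a global temporary view lives in `global_temp`, queries have to refer to it by its qualified name. A minimal sketch, assuming `df` is a Spark DataFrame; `publish_global_view` is a hypothetical helper name, not part of any Databricks API.

```python
GLOBAL_TEMP_DB = "global_temp"  # the system-preserved database named above


def publish_global_view(df, view_name):
    """Register `df` as a global temporary view and return its qualified name.

    `df` is assumed to be a pyspark.sql.DataFrame.
    """
    df.createOrReplaceGlobalTempView(view_name)
    # Global temporary views must be referenced through the global_temp database.
    return f"{GLOBAL_TEMP_DB}.{view_name}"
```

The returned name can then be used in a query, for example `spark.sql(f"SELECT * FROM {publish_global_view(df, 'events')}")`, where `events` is an arbitrary example name.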

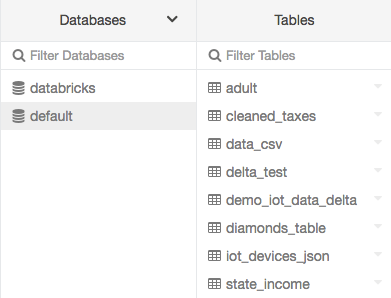

Creates a view if it does not exist. My colleague is using PySpark in Databricks, and the usual step is to run an import using `data = spark.read.format("delta").parquet("parquet_table").select("column1", "column2")` and then this caching step, which is really fast. GLOBAL TEMPORARY views are tied to a system-preserved temporary database, `global_temp`. The table or view name to be cached. A table name, optionally qualified with a database name. The created table always uses its own directory in the default warehouse location.

Source: docs.gcp.databricks.com

The `registerTempTable` method creates an in-memory table that is scoped to the cluster in which it was created. The table or view name may be optionally qualified with a database name. A local table is not accessible from other clusters and is not registered in the Hive metastore. The main unit of execution in Delta Live Tables is a pipeline. Create an external table.

Source: adaltas.com

Returns all the tables for an optionally specified database. The table or view name may be optionally qualified with a database name. If a query is cached, then a temp view is created for this query. This allows us to use SQL tables as intermediate stores without worrying about what else is running in other clusters, notebooks, or jobs. The data in a temporary table is stored using Hive's highly optimized, in-memory columnar format.

Source: willvelida.medium.com

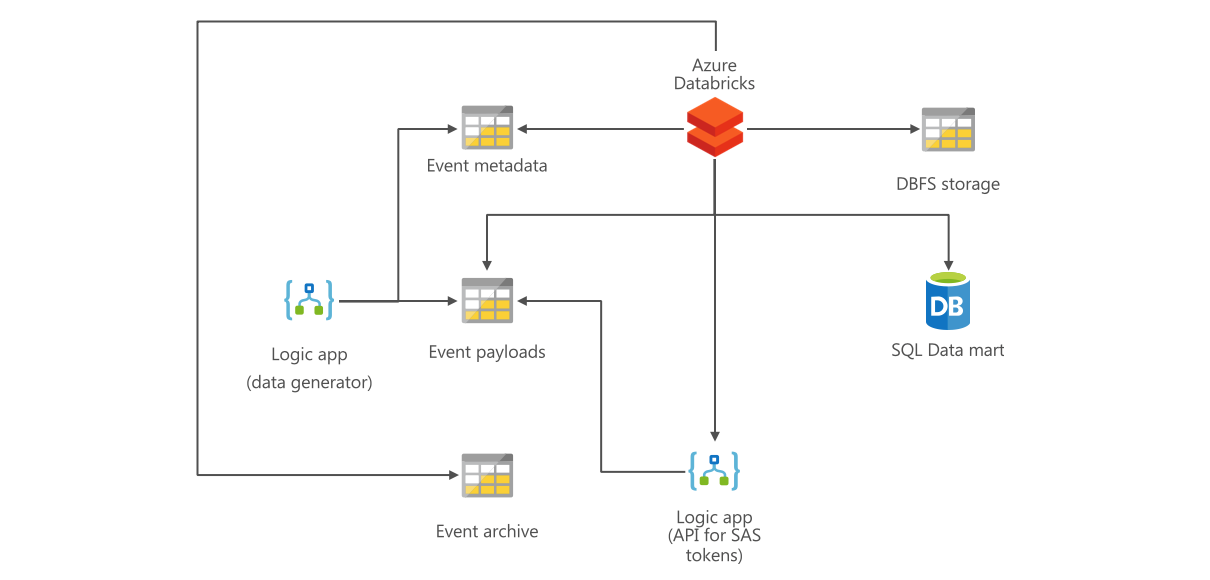

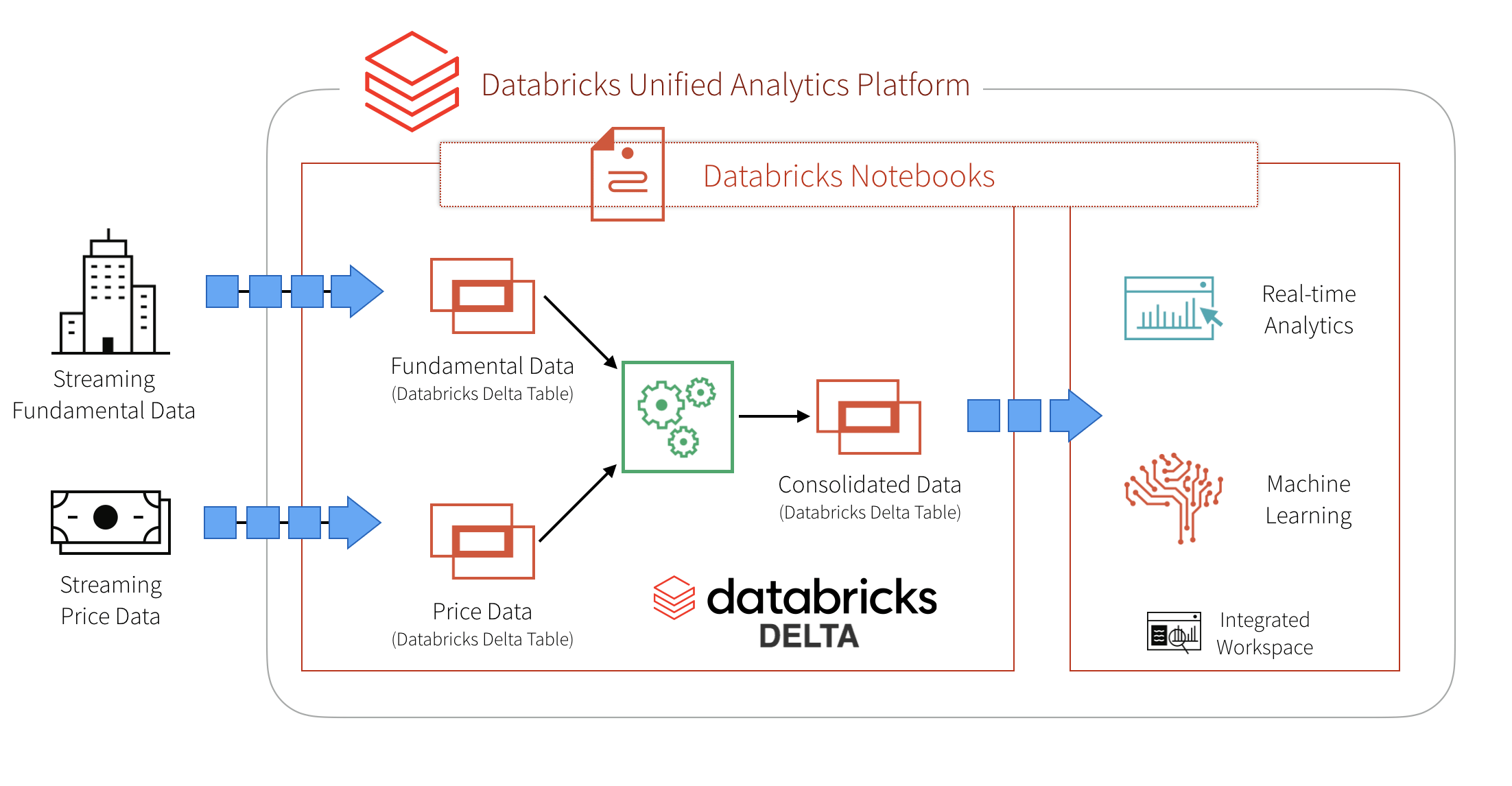

I was using Databricks Runtime 6.4 (Apache Spark 2.4.5, Scala 2.11). A pipeline is composed of queries that transform data, implemented as a directed acyclic graph (DAG) linking data sources to a data target, optional data quality constraints, and an associated configuration required to run the pipeline. `CREATE TABLE [IF NOT EXISTS] [db_name.]table_name1 LIKE [db_name.]table_name2 [LOCATION path]`: create a managed table using the definition/metadata of an existing table or view. For details about Hive support, see Apache Hive compatibility. As an R user, I am looking for this `registerTempTable`.

Source: cloudarchitected.com

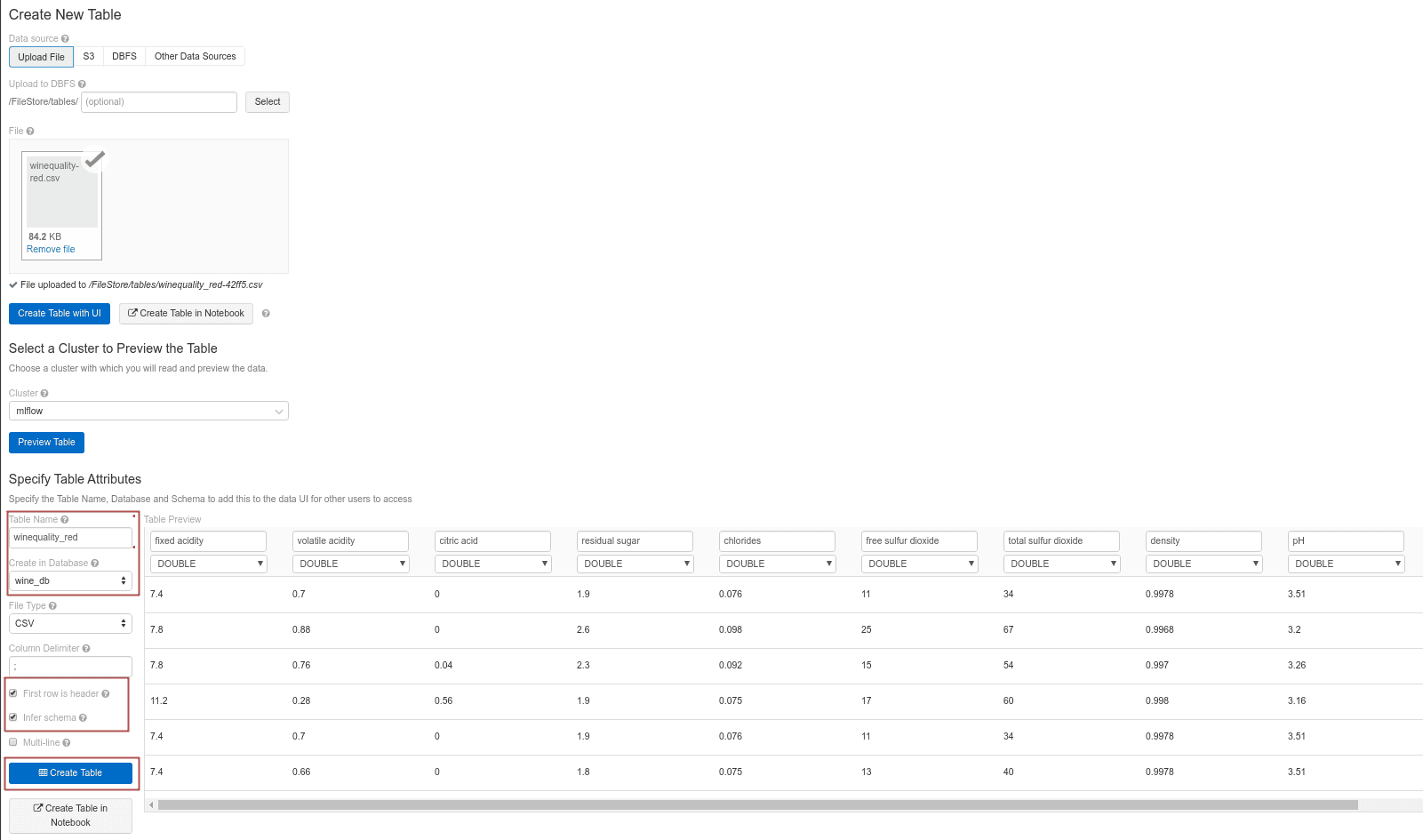

A local table is not accessible from other clusters and is not registered in the Hive metastore. Create Table Like. Registering a temporary table using sparklyr in Databricks. The notebook `data_import.ipynb` to import the wine dataset to Databricks and create a Delta Table. The created table always uses its own directory in the default warehouse location.

Source: sqlshack.com

The table or view name may be optionally qualified with a database name. For details about Hive support, see Apache Hive compatibility. GLOBAL TEMPORARY views are tied to a system-preserved temporary database, `global_temp`. This allows us to use SQL tables as intermediate stores without worrying about what else is running in other clusters, notebooks, or jobs. A local table is not accessible from other clusters or if using Databricks notebook not in.

Source: forums.databricks.com

A pipeline is composed of queries that transform data, implemented as a directed acyclic graph (DAG) linking data sources to a data target, optional data quality constraints, and an associated configuration required to run the pipeline. Delta Lake is already integrated in the runtime. Creates a view if it does not exist. Only cache the table when it is first used instead of immediately. A local table is not accessible from other clusters and is not registered in the Hive metastore.

Source: streamsets.com

Syntax: `CREATE [OR REPLACE] [[GLOBAL] TEMPORARY] VIEW [IF NOT EXISTS] view_identifier create_view_clauses AS query`. Parameters. The location of an existing Delta table. My colleague is using PySpark in Databricks, and the usual step is to run an import using `data = spark.read.format("delta").parquet("parquet_table").select("column1", "column2")` and then this caching step, which is really fast. `ALTER VIEW` and `DROP VIEW` only change metadata. The `registerTempTable` method creates an in-memory table that is scoped to the cluster in which it was created.
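
To make the optional keywords in the view syntax concrete, here is a rough sketch that assembles the statement as a string. `create_view_sql` is a hypothetical helper, it leaves out `create_view_clauses` for brevity, and the two validity checks reflect my understanding of how Spark treats these keyword combinations rather than anything stated on this page.

```python
def create_view_sql(view_identifier, query, *, or_replace=False,
                    global_temp=False, temporary=False, if_not_exists=False):
    """Render a CREATE VIEW statement from the optional keywords in the syntax.

    Illustrative only: create_view_clauses (column list, comment, properties)
    are omitted.
    """
    # Assumption: Spark rejects a statement that has both keywords.
    if or_replace and if_not_exists:
        raise ValueError("OR REPLACE and IF NOT EXISTS cannot be combined")
    # GLOBAL only appears as part of GLOBAL TEMPORARY in the grammar.
    if global_temp and not temporary:
        raise ValueError("GLOBAL is only valid together with TEMPORARY")

    parts = ["CREATE"]
    if or_replace:
        parts.append("OR REPLACE")
    if global_temp:
        parts.append("GLOBAL")
    if temporary:
        parts.append("TEMPORARY")
    parts.append("VIEW")
    if if_not_exists:
        parts.append("IF NOT EXISTS")
    parts += [view_identifier, "AS", query]
    return " ".join(parts)
```

The resulting string could be passed to `spark.sql(...)` in a notebook. Building the text separately keeps the keyword order from the syntax line visible in one place.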

Source: databricks.com

Delta Live Tables has helped our teams save time and effort in managing data at the multi-trillion-record scale and continuously improving our AI engineering capability. With this capability augmenting the existing lakehouse architecture, Databricks is disrupting the ETL and data warehouse markets, which is important for companies like ours. I was using Databricks Runtime 6.4 (Apache Spark 2.4.5, Scala 2.11). If no database is specified, then the tables are returned from the current database. Local Table, a.k.a. Temporary Table, a.k.a. Temporary View. `CREATE TABLE [IF NOT EXISTS] [db_name.]table_name1 LIKE [db_name.]table_name2 [LOCATION path]`: create a managed table using the definition/metadata of an existing table or view.

Source: sqlshack.com

This allows us to use SQL tables as intermediate stores without worrying about what else is running in other clusters, notebooks, or jobs. A local table is not accessible from other clusters and is not registered in the Hive metastore. The notebook `data_import.ipynb` to import the wine dataset to Databricks and create a Delta Table. If a view of the same name already exists, it is replaced. The `registerTempTable` method creates an in-memory table that is scoped to the cluster in which it was created.

Source: willvelida.medium.com

This is also known as a temporary view. The table or view name to be cached. Azure Databricks registers global tables either to the Azure Databricks Hive metastore or to an external Hive metastore. A local table is not accessible from other clusters and is not registered in the Hive metastore. These clauses are optional and order insensitive.

Source: caiomsouza.medium.com

I was using Databricks Runtime 6.4 (Apache Spark 2.4.5, Scala 2.11). A Temporary Table, also known as a Temporary View, is similar to a table, except that it's only accessible within the session where it was created. Python: create a temporary view or table from a Spark DataFrame with `temp_table_name = "temp_table"` and `df.createOrReplaceTempView(temp_table_name)`. Step 3, creating a permanent SQL table from a Spark DataFrame: `permanent_table_name = "cdp.perm_table"` and `df.write.format("parquet").saveAsTable(permanent_table_name)`. Syntax: `CREATE [OR REPLACE] [[GLOBAL] TEMPORARY] VIEW [IF NOT EXISTS] view_identifier create_view_clauses AS query`. Parameters.

Source: streamsets.com

Python: create a temporary view or table from a Spark DataFrame with `temp_table_name = "temp_table"` and `df.createOrReplaceTempView(temp_table_name)`. Step 3, creating a permanent SQL table from a Spark DataFrame: `permanent_table_name = "cdp.perm_table"` and `df.write.format("parquet").saveAsTable(permanent_table_name)`. This is also known as a temporary view. Inline table (Databricks SQL): a temporary table created using a VALUES clause. I was using Databricks Runtime 6.4 (Apache Spark 2.4.5, Scala 2.11). The table or view name to be cached.

Source: docs.databricks.com

ALTER VIEW and DROP VIEW only change metadata. You implement Delta Live Tables queries as SQL. Caches contents of a table or output of a query with the given storage level. Additionally the output of this statement may be filtered by an optional matching pattern. If a query is cached then a temp view is created for this query.

The `registerTempTable` method creates an in-memory table that is scoped to the cluster in which it was created. Only cache the table when it is first used instead of immediately. This allows us to use SQL tables as intermediate stores without worrying about what else is running in other clusters, notebooks, or jobs. The main unit of execution in Delta Live Tables is a pipeline. Create an external table.
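
The caching behaviour described in these snippets (caching a table or a query result with a storage level, optionally only on first use) corresponds to Spark SQL's `CACHE TABLE` statement. The sketch below only assembles the statement text. `cache_table_sql` is a hypothetical helper, and the argument values in the usage note are examples, not taken from this page.

```python
def cache_table_sql(table_identifier, *, lazy=False, storage_level=None,
                    query=None):
    """Render a Spark SQL CACHE TABLE statement as a string (illustrative)."""
    parts = ["CACHE"]
    if lazy:
        # LAZY: only cache the table when it is first used, not immediately.
        parts.append("LAZY")
    parts += ["TABLE", table_identifier]
    if storage_level is not None:
        # Storage level name, e.g. MEMORY_ONLY or DISK_ONLY.
        parts.append(f"OPTIONS ('storageLevel' = '{storage_level}')")
    if query is not None:
        # Caching the output of a query; a temp view is created for it.
        parts += ["AS", query]
    return " ".join(parts)
```

For example, `cache_table_sql("recent", lazy=True, query="SELECT * FROM events")` renders a lazily cached query result that could be passed to `spark.sql(...)`.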

Source: docs.databricks.com

Registering a temporary table using sparklyr in Databricks. The registerTempTable method creates an in-memory table that is scoped to the cluster in which it was created. Returns all the tables for an optionally specified database. Constructs a virtual table that has no physical data based on the result-set of a SQL query. Additionally the output of this statement may be filtered by an optional matching pattern.

Source: docs.microsoft.com

A local table is not accessible from other clusters and is not registered in the Hive metastore. For details about Hive support, see Apache Hive compatibility. This reduces scanning of. Local Table, a.k.a. Temporary Table, a.k.a. Temporary View. Only cache the table when it is first used instead of immediately.

Source: docs.gcp.databricks.com

My colleague is using PySpark in Databricks, and the usual step is to run an import using `data = spark.read.format("delta").parquet("parquet_table").select("column1", "column2")` and then this caching step, which is really fast. A local table is not accessible from other clusters and is not registered in the Hive metastore. `CREATE TABLE [IF NOT EXISTS] [db_name.]table_name1 LIKE [db_name.]table_name2 [LOCATION path]`: create a managed table using the definition/metadata of an existing table or view. Constructs a virtual table that has no physical data, based on the result set of a SQL query. A view name, optionally qualified with a database name.

Source: databricks.com

If a query is cached, then a temp view is created for this query. The created table always uses its own directory in the default warehouse location. My colleague is using PySpark in Databricks, and the usual step is to run an import using `data = spark.read.format("delta").parquet("parquet_table").select("column1", "column2")` and then this caching step, which is really fast. Registering a temporary table using sparklyr in Databricks.
